Ppo R N

The intricacies of data compression and its pivotal role in modern computing have led to the development of numerous algorithms, each designed to optimize storage and transfer efficiency. One such algorithm, albeit not directly related to the acronym “Ppo R N,” is the Huffman coding method, which assigns shorter codes to more frequently occurring characters in a dataset, thereby reducing the overall size of the data.

However, “Ppo R N” does not directly correspond to a widely recognized acronym in the field of data compression or computer science. It’s possible that this could be a typo or a reference to a very specific, perhaps proprietary, technology or concept not broadly known in the industry.

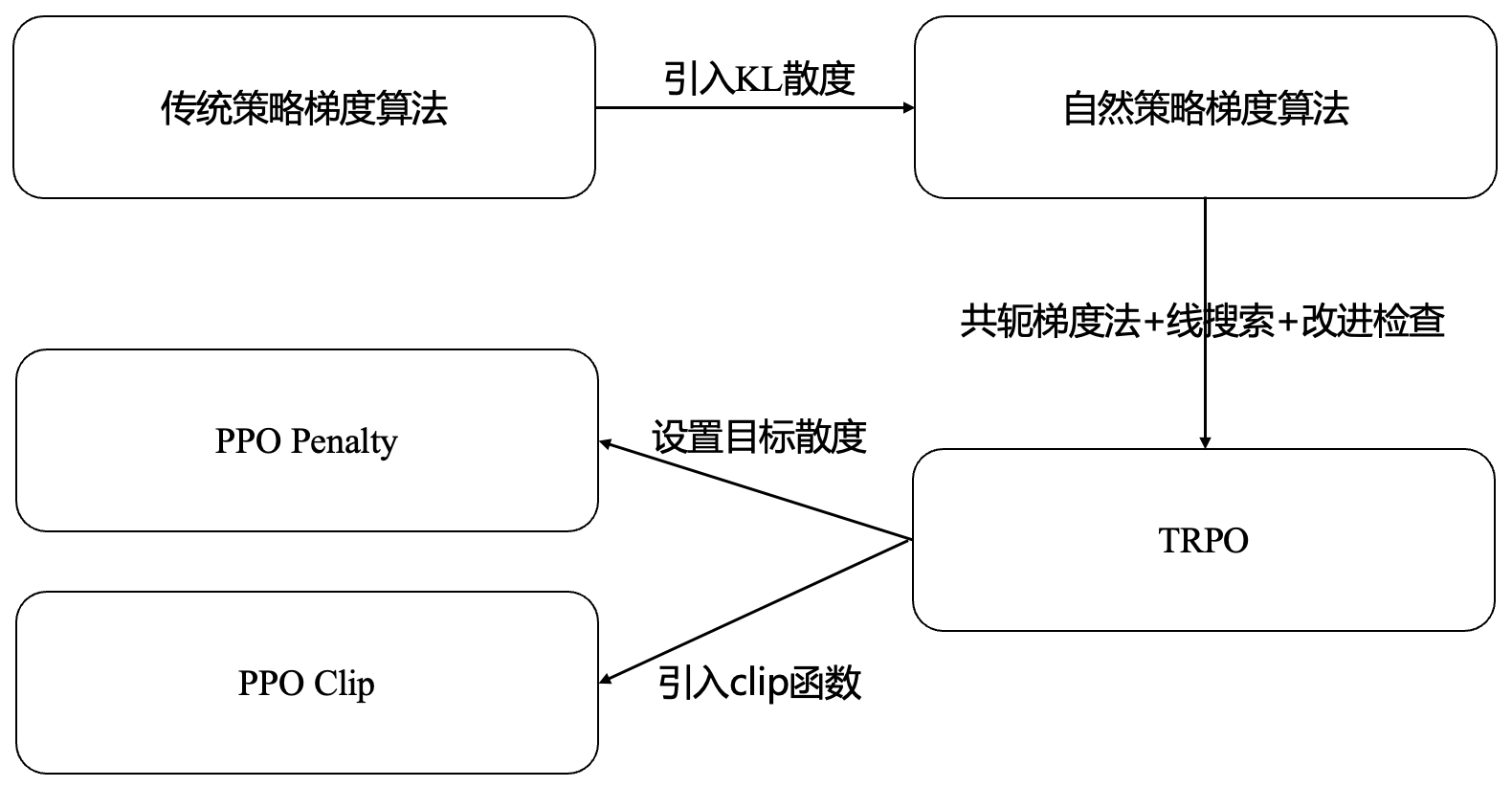

If we consider the realm of artificial intelligence (AI) and machine learning (ML), where algorithms are continually being developed and refined, “Ppo R N” might be interpreted as a misunderstanding or a mix-up of terms like “PPO,” which stands for Proximal Policy Optimization. PPO is a model-free, on-policy reinforcement learning algorithm that is known for its simplicity and effectiveness in training agents to perform a wide range of tasks, from playing video games to controlling robots.

Proximal Policy Optimization (PPO) is particularly noted for limiting how far each update can move the policy away from the one that collected the data. Its most common variant does this by clipping the probability ratio between the new and old policies, which keeps learning stable while still allowing several gradient steps on each batch of experience. PPO has been widely used to train agents for complex decision-making and adaptation in dynamic environments.
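One common way PPO keeps updates close to the current policy is the clipped surrogate objective. The sketch below is a minimal, framework-free illustration of that objective only, not a full training loop. The function name `clipped_surrogate`, the plain-list inputs, and the default `epsilon=0.2` are assumptions made for this example; real implementations compute the ratios and advantages from a policy network and collected trajectories.

```python
def clipped_surrogate(ratios, advantages, epsilon=0.2):
    """Average clipped surrogate objective over a batch of samples.

    ratios:     new_policy_prob / old_policy_prob for each sampled action
    advantages: estimated advantage for each sampled action
    epsilon:    clip range (assumed value, chosen for illustration)
    """
    if not ratios or len(ratios) != len(advantages):
        raise ValueError("ratios and advantages must be non-empty and equal length")

    total = 0.0
    for ratio, advantage in zip(ratios, advantages):
        # Restrict the ratio to the interval [1 - epsilon, 1 + epsilon].
        clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * advantage, clipped_ratio * advantage)
    return total / len(ratios)


# A ratio of 1.5 with a positive advantage is capped at 1.2 times the advantage,
# so pushing the policy further in that direction earns no extra objective.
objective = clipped_surrogate([1.5, 0.5], [1.0, -1.0])
```

Because the objective stops improving once the ratio leaves the clip range, the optimizer has little incentive to move the policy far from the one that generated the data.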

The integration of such advanced AI models into various sectors, including healthcare, finance, and education, underscores the potential for AI to revolutionize how we approach problem-solving and decision-making. For instance, in healthcare, AI can help in the diagnosis of diseases by analyzing large datasets of patient information, leading to more accurate and timely interventions.

In the context of data analysis, which might be tangentially related to the interpretation of “Ppo R N,” the ability to compress and efficiently process large datasets is crucial. Techniques such as Principal Component Analysis (PCA) and Autoencoders are used for dimensionality reduction and data compression, enabling researchers and analysts to extract meaningful insights from vast amounts of data.

In conclusion, while “Ppo R N” does not directly relate to a known concept or technology, exploring related fields and technologies provides insight into the complex and innovative world of computer science and AI. The development and application of algorithms like PPO, along with advancements in data compression and analysis, are continually pushing the boundaries of what is possible with digital technology.

Advanced Technologies in AI and Data Compression

- Quantum Computing: Quantum computers are expected to offer large speedups for certain classes of problems, in some cases exponential relative to the best known classical algorithms. This could significantly impact fields like cryptography and complex optimization.

- Neural Networks: Inspired by the human brain, neural networks are pivotal in machine learning, enabling tasks such as image recognition, speech processing, and natural language processing with unprecedented accuracy.

- Cloud Computing: The shift towards cloud computing has made it possible for individuals and organizations to access vast computational resources on-demand, facilitating the development and deployment of complex AI models.

Implementation of Huffman Coding

For those interested in exploring data compression further, implementing a Huffman coding algorithm can be an educational and insightful project. Here’s a simplified overview of how one might approach this:

- Frequency Analysis: Begin by analyzing the frequency of each character in your dataset.

- Tree Construction: Based on the frequencies, construct a binary tree where the path to each leaf node represents the Huffman code for a character.

- Encoding: Use the Huffman codes to encode your data, replacing each character with its corresponding code.

- Decoding: To decode the data, traverse the Huffman tree based on the bits in the encoded data, arriving at a leaf node for each character.
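The encoding and decoding steps in this overview can be sketched in isolation by starting from a small, hand-written code table. The table `CODES` below is a hypothetical prefix-free code chosen for illustration, not one derived from real frequency data, and the helper names are assumptions of this example.

```python
# Hypothetical prefix-free code table (not computed from real frequencies).
CODES = {"a": "0", "b": "10", "c": "11"}


def encode(text, codes):
    """Replace each character with its bit string."""
    return "".join(codes[ch] for ch in text)


def build_decoding_tree(codes):
    """Rebuild the binary tree as nested dicts: each bit selects a child."""
    root = {}
    for symbol, code in codes.items():
        node = root
        for bit in code[:-1]:
            node = node.setdefault(bit, {})
        node[code[-1]] = symbol  # the last bit leads to a leaf
    return root


def decode(bits, codes):
    """Walk the tree bit by bit, emitting a character at every leaf."""
    root = build_decoding_tree(codes)
    node = root
    out = []
    for bit in bits:
        node = node[bit]
        if isinstance(node, str):  # reached a leaf
            out.append(node)
            node = root            # restart at the root for the next character
    return "".join(out)


encoded = encode("abacab", CODES)   # "010011010"
decoded = decode(encoded, CODES)    # "abacab"
```

Decoding needs no separators between codes because no code in the table is a prefix of another, so every leaf is reached unambiguously.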

Step 1: Analyze Character Frequencies

This involves counting how many times each character appears in your dataset.
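As a minimal sketch, the counting can be done with Python's standard `collections.Counter`. The function name `char_frequencies` and the sample string are assumptions made for this example.

```python
from collections import Counter


def char_frequencies(text):
    """Return a mapping from each character to its number of occurrences."""
    return Counter(text)


freqs = char_frequencies("abracadabra")
# 'a' appears 5 times, 'b' and 'r' twice each, 'c' and 'd' once each.
```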

Step 2: Build the Huffman Tree

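Start with one leaf node per character. Repeatedly remove the two nodes with the lowest frequencies and join them under a new parent whose frequency is their sum, until a single node remains. That node is the root of the tree, every leaf is a character, and rarer characters end up deeper in the tree.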
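One way to sketch this merging process is with a priority queue from Python's `heapq` module. The representation used here is an assumption of this example: a leaf is a one-character string and an internal node is a `(left, right)` tuple. The running counter only breaks ties so that nodes themselves are never compared.

```python
import heapq
from itertools import count


def build_huffman_tree(freqs):
    """Merge the two least frequent nodes until one root remains."""
    tiebreak = count()
    heap = [(freq, next(tiebreak), symbol) for symbol, freq in freqs.items()]
    heapq.heapify(heap)
    if not heap:
        return None

    while len(heap) > 1:
        freq1, _, left = heapq.heappop(heap)    # lowest frequency
        freq2, _, right = heapq.heappop(heap)   # second lowest
        # The parent's frequency is the sum of its children's frequencies.
        heapq.heappush(heap, (freq1 + freq2, next(tiebreak), (left, right)))

    return heap[0][2]


# Frequencies of the characters in "abracadabra".
tree = build_huffman_tree({"a": 5, "b": 2, "r": 2, "c": 1, "d": 1})
# tree == ("a", (("c", "d"), ("b", "r")))
```

Ties between equal frequencies can be broken in different ways, so other valid Huffman trees exist for the same input; they all give the same total encoded length.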

Step 3: Generate Huffman Codes

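Traverse the tree from the root, assigning 0 to one branch and 1 to the other at each internal node. The sequence of bits on the path from the root to a leaf is that character’s code. Because characters sit only at the leaves, no code is a prefix of another, so each code is unique and the encoded data can be decoded without separators.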
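The traversal can be sketched as a short recursive function. The tree below is written out by hand as nested `(left, right)` tuples with one-character strings as leaves; that representation and the name `generate_codes` are assumptions of this example.

```python
def generate_codes(node, prefix="", codes=None):
    """Collect the root-to-leaf bit path for every character in the tree."""
    if codes is None:
        codes = {}
    if isinstance(node, str):
        # Leaf: the accumulated path is the code. A tree consisting of a
        # single leaf has an empty path, so fall back to "0".
        codes[node] = prefix or "0"
    else:
        left, right = node
        generate_codes(left, prefix + "0", codes)    # left branch adds a 0
        generate_codes(right, prefix + "1", codes)   # right branch adds a 1
    return codes


# Hand-written example tree for the characters of "abracadabra".
tree = ("a", (("c", "d"), ("b", "r")))
codes = generate_codes(tree)
# codes == {"a": "0", "c": "100", "d": "101", "b": "110", "r": "111"}
```

With these codes, "abracadabra" takes 23 bits, compared with 33 bits for a fixed 3-bit code over the same five characters.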

FAQ Section

What is the primary advantage of using Huffman coding for data compression?

The primary advantage is that it allows for variable-length codes based on the frequency of characters, leading to more efficient compression compared to fixed-length codes.

How does Proximal Policy Optimization (PPO) contribute to the field of artificial intelligence?

PPO is a reinforcement learning algorithm for training agents to make decisions in complex environments. It is particularly valued for keeping each policy update close to the current policy, which leads to more stable and efficient learning.

The marriage of data compression techniques with advanced AI models like PPO is emblematic of the innovative strides being made in the tech industry. As these technologies continue to evolve, we can expect significant advancements in how we interact with and understand the world around us.