Intro

Discover five ways to count duplicates in a dataset, a basic step in duplicate detection and data cleansing.

Counting duplicates in a dataset is a common task, and how you do it depends on the tools and programming languages you use. Whether the data is for analysis, scientific research, or business intelligence, identifying and counting duplicate entries is an essential part of data cleaning and preprocessing: duplicate records can skew results, lead to incorrect conclusions, and waste storage and processing time if they are not managed.

The five methods below each have their own strengths and suit different scenarios.

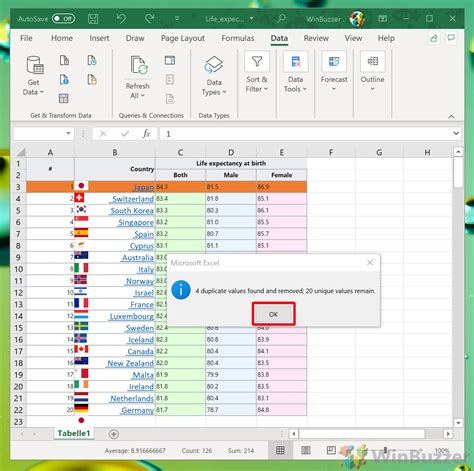

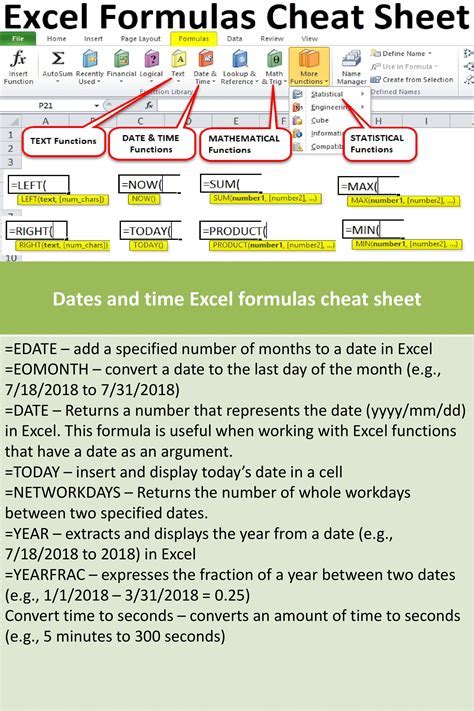

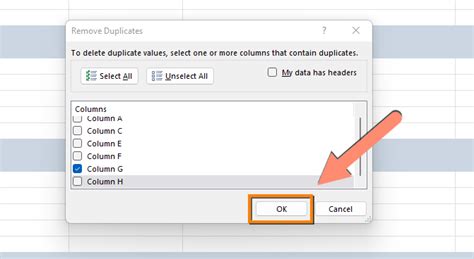

Method 1: Using Excel

Excel is one of the most widely used spreadsheet programs and offers several ways to count duplicates. The most straightforward uses the COUNTIF function. For instance, if you have a list of names in column A starting in cell A2 and want to know how many times each name appears, enter =COUNTIF(A:A, A2) in the adjacent cell of a helper column and fill it down. The formula counts the cells in column A that match the value in A2; because that count includes the cell itself, any result greater than 1 marks a duplicate. COUNTIF ignores letter case, so "Smith" and "smith" are counted as the same name.
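
To make the helper column concrete, here is a minimal Python sketch of what =COUNTIF(A:A, A2) returns when filled down a column. The function name countif_column and the sample names are hypothetical. The sketch compares text case-insensitively, as COUNTIF does, but it does not emulate COUNTIF's wildcard characters (* and ?), so treat it as an approximation rather than a reimplementation of Excel.

```python
from collections import Counter


def countif_column(values: list[str]) -> list[int]:
    """Return, for each cell, how many cells in the column match it.

    Approximates filling =COUNTIF(A:A, A2) down a helper column.
    Text is compared case-insensitively, as COUNTIF does; COUNTIF's
    wildcard characters (* and ?) are not emulated here.
    """
    # Count every value once, keyed on its case-folded form.
    totals = Counter(v.casefold() for v in values)
    # Look up each cell's total, like each row's COUNTIF result.
    return [totals[v.casefold()] for v in values]


names = ["Alice", "Bob", "alice", "Carol", "Bob"]
helper = countif_column(names)
print(helper)  # [2, 2, 2, 1, 2]

# Any count greater than 1 marks a duplicated value.
print([n for n, c in zip(names, helper) if c > 1])
```

Building the Counter once and then looking up each row keeps the work linear in the number of rows, whereas a literal COUNTIF per row rescans the whole column every time.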

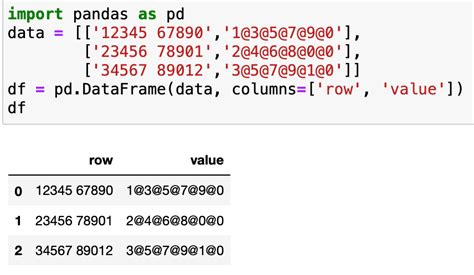

Method 2: Using Python

Python, with libraries such as pandas, offers powerful tools for data manipulation and analysis. To count duplicates in a list or a DataFrame column, you can use the value_counts() method: convert the list to a pandas Series and call value_counts() to get a Series holding the count of each unique item, sorted from most to least frequent.
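
In pandas, this is pd.Series(items).value_counts(). The sketch below uses the standard library's collections.Counter instead, so it runs without pandas installed; the sample list is made up for illustration.

```python
from collections import Counter

# Hypothetical sample data; in pandas: pd.Series(items).value_counts()
items = ["apple", "banana", "apple", "cherry", "banana", "apple"]

counts = Counter(items)

# most_common() lists items from most to least frequent, like value_counts().
for item, n in counts.most_common():
    print(item, n)

# Keep only the values that actually repeat.
duplicates = {item: n for item, n in counts.items() if n > 1}
print(duplicates)  # {'apple': 3, 'banana': 2}
```

One difference to keep in mind: value_counts() drops missing values by default, whereas Counter counts None like any other item.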

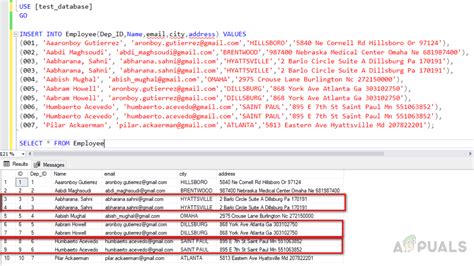

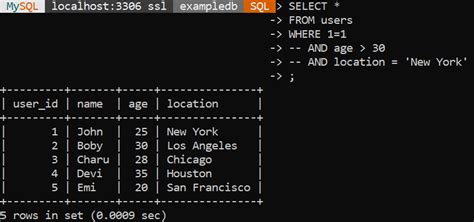

Method 3: Using SQL

SQL (Structured Query Language) is used to manage relational databases, and it can count duplicates by combining the COUNT() aggregate function with a GROUP BY clause. For instance, to count how many times each value appears in a column named column_name in a table named table_name, keeping only values that occur more than once, you would use a query like:

SELECT column_name, COUNT(*) AS count FROM table_name GROUP BY column_name HAVING COUNT(*) > 1;

GROUP BY collapses rows with equal values into one group, and HAVING filters those groups after counting, so the query returns each value that appears more than once along with the number of times it appears.
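
The same GROUP BY / HAVING pattern can be tried without a database server using Python's built-in sqlite3 module and an in-memory database. The table name customers and column name are hypothetical stand-ins for table_name and column_name, and the alias n_occurrences is used only to avoid reusing the name of the COUNT function.

```python
import sqlite3

# In-memory database with a hypothetical customers table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT)")
conn.executemany(
    "INSERT INTO customers (name) VALUES (?)",
    [("Alice",), ("Bob",), ("Alice",), ("Carol",), ("Bob",), ("Alice",)],
)

# GROUP BY collapses equal values; HAVING keeps only groups with more than one row.
rows = conn.execute(
    """
    SELECT name, COUNT(*) AS n_occurrences
    FROM customers
    GROUP BY name
    HAVING COUNT(*) > 1
    ORDER BY n_occurrences DESC
    """
).fetchall()

print(rows)  # [('Alice', 3), ('Bob', 2)]
conn.close()
```

Whether "Alice" and "alice" land in the same group depends on the column's collation; SQLite's default comparison is case-sensitive, unlike Excel's COUNTIF.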

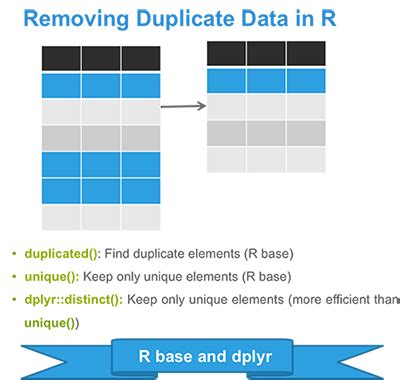

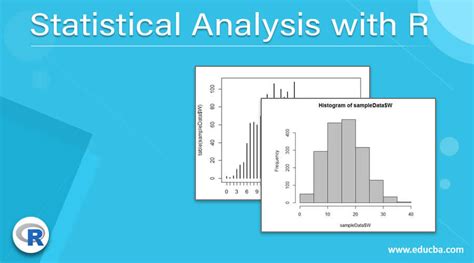

Method 4: Using R

R is a popular programming language for statistical computing and graphics. To count duplicates in R, you can use the base table() function for simple cases or the dplyr package (for example, its count() function) for larger data frames. Applied to a vector, table() returns a frequency table showing how many times each value appears.

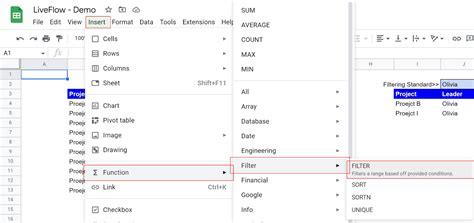

Method 5: Using Google Sheets

Google Sheets provides the same COUNTIF function as Excel, used in the same way. Its QUERY function is also useful for counting duplicates, especially in larger datasets or when you need more complex conditions, because it accepts SQL-like queries that can group rows and count them.

Choosing the Right Method

The right method depends on the context and on the tools you are most comfortable with. For small datasets and quick checks, Excel or Google Sheets is usually the most convenient. For larger datasets, or when the count has to be repeated automatically, Python or R is a better fit. SQL is the natural choice when the data already lives in a relational database.

Practical Examples

- Excel Example: Suppose you have a list of 100 customer names, and you want to find out how many customers have the same name. Using the COUNTIF function, you can quickly identify duplicates and understand the distribution of names in your customer list.

- Python Example: In data science, counting how often each value occurs is a quick way to understand the distribution of a categorical variable. For instance, in a dataset of movies, counting how many times each genre appears identifies the most common genres.
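
For the movie-genre example, a dataset often stores several genres per movie. A minimal sketch, assuming each movie is a dict with a hypothetical genres list (the titles and genres are made up), flattens those lists and counts each genre:

```python
from collections import Counter

# Hypothetical dataset: each movie lists one or more genres.
movies = [
    {"title": "Movie A", "genres": ["Drama", "Romance"]},
    {"title": "Movie B", "genres": ["Comedy"]},
    {"title": "Movie C", "genres": ["Drama", "Thriller"]},
    {"title": "Movie D", "genres": ["Comedy", "Drama"]},
]

# Flatten the per-movie lists and count how often each genre occurs.
genre_counts = Counter(g for movie in movies for g in movie["genres"])

# The two most common genres.
print(genre_counts.most_common(2))  # [('Drama', 3), ('Comedy', 2)]
```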

Benefits of Each Method

Each method has its benefits:

- Excel and Google Sheets are great for manual, small-scale data analysis and are widely accessible.

- Python and R offer powerful scripting capabilities, ideal for large-scale data analysis and automation.

- SQL is well suited to managing and analyzing data within relational databases.

Steps for Implementation

- Identify Your Dataset: Determine the source and nature of your data.

- Choose Your Tool: Based on the size of your dataset and your comfort level, select the most appropriate method.

- Apply the Method: Use the chosen method to count duplicates, following the specific syntax and functions relevant to your tool.

- Analyze Results: Interpret the results to understand the distribution of your data and identify any duplicates.
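
When analyzing the results, be explicit about which number "duplicates" refers to, because the methods above can report different figures for the same data. A minimal sketch, with a hypothetical function name and sample data, computes three common interpretations side by side:

```python
from collections import Counter


def duplicate_summary(values):
    """Summarize duplicates in a list using three common definitions."""
    counts = Counter(values)
    repeated = {v: n for v, n in counts.items() if n > 1}
    return {
        # Distinct values that appear more than once
        # (the rows GROUP BY ... HAVING COUNT(*) > 1 returns).
        "repeated_values": len(repeated),
        # Rows whose value appears more than once
        # (rows a COUNTIF helper column would mark with a count > 1).
        "rows_in_duplicate_groups": sum(repeated.values()),
        # Extra copies beyond each first occurrence
        # (rows that removing duplicates would delete).
        "surplus_copies": len(values) - len(counts),
    }


names = ["Alice", "Bob", "Alice", "Carol", "Bob", "Alice"]
print(duplicate_summary(names))
# {'repeated_values': 2, 'rows_in_duplicate_groups': 5, 'surplus_copies': 3}
```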

Frequently Asked Questions

What is the purpose of counting duplicates in a dataset?

Counting duplicates helps in data cleaning and preprocessing, ensuring that analysis results are accurate and not skewed by redundant data.

How do I choose the best method for counting duplicates?

The choice depends on the size of your dataset, the tools you are familiar with, and whether you need to automate the process. For small datasets, Excel or Google Sheets might suffice, while for larger datasets, Python or R could be more appropriate.

Can counting duplicates be automated?

Yes, counting duplicates can be automated using scripting languages like Python or R. These languages offer libraries and functions that can efficiently count duplicates in large datasets.
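
As a sketch of what such automation might look like, the function below reads CSV data with Python's standard csv module and reports values that appear more than once in one column. The function name, the email column, and the inline sample data are hypothetical; a real script would open a file rather than wrap a string in io.StringIO.

```python
import csv
import io
from collections import Counter


def count_duplicates_in_column(csv_file, column):
    """Return {value: count} for values in `column` that appear more than once."""
    reader = csv.DictReader(csv_file)
    # Strip surrounding whitespace so "a@example.com " matches "a@example.com".
    counts = Counter(row[column].strip() for row in reader)
    return {value: n for value, n in counts.items() if n > 1}


# Hypothetical sample data standing in for a real file.
sample = io.StringIO(
    "id,email\n"
    "1,a@example.com\n"
    "2,b@example.com\n"
    "3,a@example.com\n"
    "4,c@example.com\n"
    "5,b@example.com\n"
)

print(count_duplicates_in_column(sample, "email"))
# {'a@example.com': 2, 'b@example.com': 2}
```

With a real file, you would call it as `with open("customers.csv", newline="") as f: count_duplicates_in_column(f, "email")`, where customers.csv is a hypothetical filename, and schedule the script to run whenever new data arrives.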

In conclusion, counting duplicates is a fundamental step in data analysis that can significantly impact the accuracy and reliability of your findings. By understanding the different methods available and choosing the one that best fits your needs, you can ensure that your dataset is clean, consistent, and ready for analysis. Whether you're working with small datasets in Excel or managing large databases with SQL, the ability to efficiently count and manage duplicates is a valuable skill for any data professional.