Intro

Discover 5 ways to highlight duplicates in datasets, leveraging data analysis, duplicate detection, and data cleansing techniques to improve data quality and accuracy, and streamline data management processes efficiently.

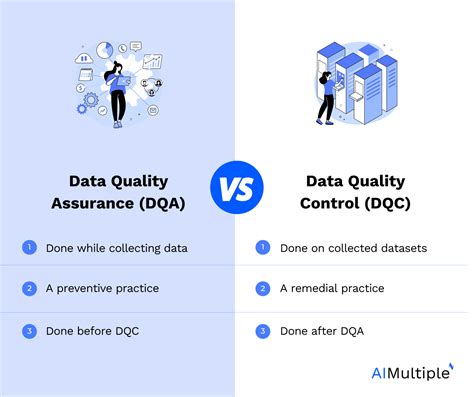

Highlighting duplicates in a dataset or a list is crucial for data analysis, data cleaning, and ensuring data integrity. Duplicates can lead to inaccurate analysis, inefficient use of resources, and poor decision-making. There are several ways to identify and highlight duplicates, depending on the tools and software you are using. Here, we will explore five common methods to highlight duplicates, particularly focusing on Microsoft Excel, a widely used spreadsheet program, but also touching upon other tools and programming languages.

The importance of identifying duplicates cannot be overstated. In databases, duplicates can skew statistical analyses and lead to incorrect conclusions. In business, duplicate records can result in wasted resources, such as sending multiple marketing materials to the same customer. Therefore, having efficient methods to identify and manage duplicates is essential for data quality and process optimization.

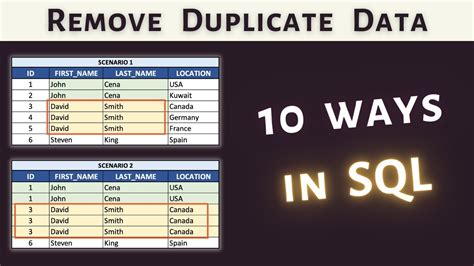

Before diving into the methods, it's worth noting that the approach to highlighting duplicates can vary significantly depending on the context and the tools available. For instance, in programming languages like Python, libraries such as Pandas offer powerful functionalities to detect and handle duplicates. Similarly, in databases, SQL queries can be used to identify duplicate rows based on specific criteria.

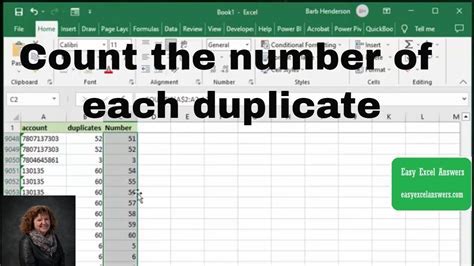

Method 1: Using Microsoft Excel

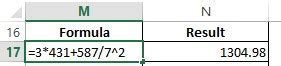

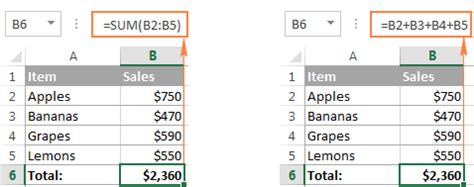

Method 2: Using Formulas in Excel

Method 3: Using Python

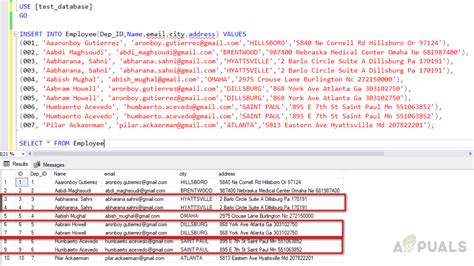

Method 4: Using SQL

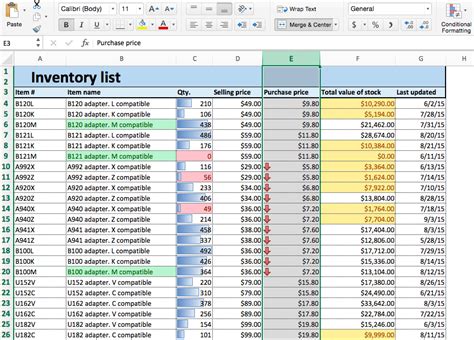

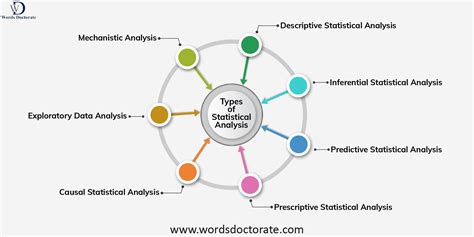

Method 5: Using Data Analysis Tools

Practical Examples and Statistical Data

- Excel Example: Suppose you have a list of customer IDs, and you want to find out how many customers have been listed more than once. Using the Conditional Formatting feature, you can quickly highlight these duplicates and then use filters to count them.

- Python Example: With Pandas, you can not only identify duplicates but also decide what action to take with them, such as dropping them or marking them for further review.

- SQL Example: If you're managing a database of user accounts, using SQL to identify duplicate email addresses can help prevent spam registrations and improve data quality.

Key Information Related to the Topic

- Benefits of Identifying Duplicates: Removing duplicates can significantly reduce the size of your dataset, making analysis faster and more accurate. It also helps in preventing errors in statistical models and ensures that each data point is unique and valuable.

- Working Mechanisms: Most methods of identifying duplicates rely on comparing values within a dataset. The efficiency of these methods can depend on the size of the dataset and the complexity of the comparison (e.g., exact matches vs. fuzzy matches).

- Steps to Handle Duplicates: After identifying duplicates, the next steps typically involve deciding whether to keep, merge, or delete them. This decision can depend on the context and the goals of the analysis.

Gallery of Duplicate Detection Methods

Duplicate Detection Image Gallery

FAQs

What is the importance of identifying duplicates in a dataset?

+Identifying duplicates is crucial for ensuring data quality, preventing errors in analysis, and optimizing resource allocation.

How can I highlight duplicates in Microsoft Excel?

+You can use the Conditional Formatting feature or formulas like COUNTIF to highlight duplicates in Excel.

What are some common tools used for duplicate detection?

+Common tools include Microsoft Excel, Python with Pandas, SQL, and various data analysis software like Tableau and Power BI.

In conclusion, identifying and highlighting duplicates is a critical step in data analysis and management. By using the right tools and techniques, individuals can ensure the integrity of their datasets, improve the accuracy of their analyses, and make more informed decisions. Whether through Excel, Python, SQL, or other data analysis tools, the methods outlined above provide a comprehensive approach to detecting and handling duplicates. By applying these methods, professionals and individuals can enhance their data quality, streamline their processes, and achieve their goals more effectively. We invite you to share your experiences with duplicate detection, ask questions, or explore further resources on this topic in the comments below.