Intro

Discover five ways to extract data efficiently, from web scraping and data mining to automated tools, and use them to unlock insights and drive business growth.

Extracting data is a crucial step in various fields, including business, research, and technology. The ability to extract relevant information from large datasets, documents, or websites can help organizations make informed decisions, improve operations, and gain a competitive edge. In this article, we will explore five ways to extract data, including their benefits, working mechanisms, and practical examples.

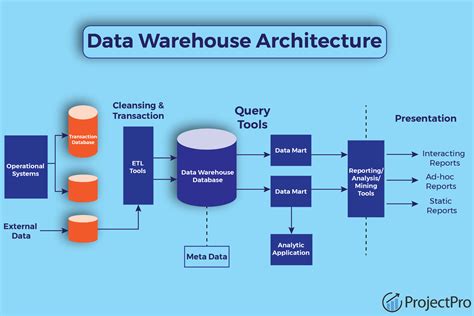

Data extraction is the process of retrieving specific data from a larger dataset, document, or website. This process can be manual or automated, depending on the complexity and size of the data. In manual data extraction, a person reviews a source and copies data from it by hand, while automated data extraction uses software tools or algorithms to do the same work faster and at a larger scale. The importance of data extraction lies in its ability to provide valuable insights, improve data quality, and support business decision-making.

As technology advances, new methods for extracting data have emerged, offering greater efficiency, accuracy, and flexibility. These methods include web scraping, data mining, optical character recognition (OCR), natural language processing (NLP), and application programming interfaces (APIs). Each method has its strengths and weaknesses, and the choice of method depends on the specific use case, data source, and desired outcome. In the following sections, we will delve into each of these methods, exploring their benefits, working mechanisms, and practical examples.

Web Scraping

How Web Scraping Works
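
To make the mechanism concrete, here is a minimal sketch of the request-and-parse cycle this section describes, using only the Python standard library. The names `LinkExtractor`, `fetch_html`, `extract_links`, and `SAMPLE_HTML`, as well as the User-Agent string, are assumptions made for illustration; real projects often use dedicated scraping libraries instead.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkExtractor(HTMLParser):
    """Collects (link text, href) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag currently open, if any
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def fetch_html(url):
    """Send an HTTP GET request and return the response body as text."""
    # Hypothetical User-Agent; identify your scraper honestly in practice.
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def extract_links(html):
    """Parse an HTML document and return the links it contains."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


# Inline sample page so the parsing step can be tried without network access.
SAMPLE_HTML = """
<html><body>
  <a href="/products/1">Widget</a>
  <a href="/products/2">Gadget</a>
</body></html>
"""

if __name__ == "__main__":
    for text, href in extract_links(SAMPLE_HTML):
        print(text, href)
```

Against a live site, the two steps would be chained as `extract_links(fetch_html(url))`, and the resulting pairs could then be written to a spreadsheet or database.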

Web scraping works by sending HTTP requests to a website and parsing the HTML response to extract relevant data. Web scraping tools can be programmed to navigate a website, fill out forms, and extract data from specific pages or sections. The extracted data can then be stored in a database or spreadsheet for further analysis. Web scraping is a powerful tool for data extraction, but it requires careful consideration of website terms of use, data quality, and potential legal issues.

Data Mining

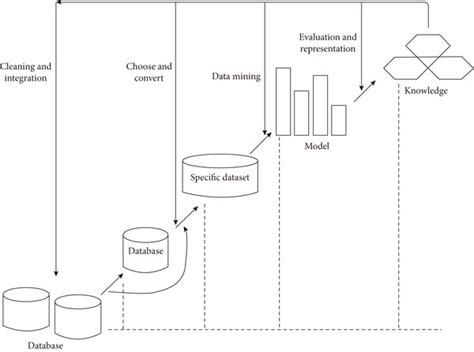

How Data Mining Works
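
As one small illustration of the anomaly-finding side of data mining covered in this section, the sketch below flags values that sit unusually far from the mean using a z-score. The function name `find_anomalies`, the threshold of 2.0, and the `DAILY_ORDERS` figures are all made up for the example; production data mining typically relies on richer models and much larger datasets.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, v)
        for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical daily order counts with one obvious spike.
DAILY_ORDERS = [102, 98, 105, 99, 101, 97, 250, 103, 100, 96]

if __name__ == "__main__":
    print(find_anomalies(DAILY_ORDERS))
```

A z-score is a deliberately simple choice here: it is easy to reason about, but it is sensitive to the very outliers it is trying to find, which is one reason model accuracy deserves attention.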

Data mining works by applying algorithms and statistical techniques to large datasets. Data mining tools can be used to identify patterns, relationships, and anomalies in data, as well as to predict future outcomes. The data mining process typically involves data preparation, model building, and model deployment. Data mining is a powerful tool for extracting insights from data, but it requires careful consideration of data quality, model accuracy, and potential biases.

Optical Character Recognition (OCR)

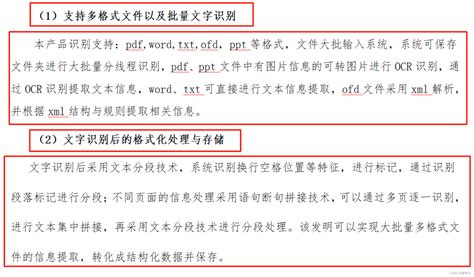

How OCR Works
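
The core idea of character recognition can be shown with a toy template matcher. This is only a sketch under strong assumptions: the "image" is a tiny grid of `#` and `.` pixels, glyphs have a fixed 3x5 size with a one-column gap, and the `TEMPLATES` table covers just four digits. Real OCR engines handle arbitrary fonts, skew, and noise with far more sophisticated models.

```python
# Toy 3x5 pixel templates for a handful of digits (illustrative only).
TEMPLATES = {
    "0": ["###", "#.#", "#.#", "#.#", "###"],
    "1": [".#.", "##.", ".#.", ".#.", "###"],
    "2": ["###", "..#", "###", "#..", "###"],
    "7": ["###", "..#", ".#.", ".#.", ".#."],
}
GLYPH_WIDTH = 3
GAP = 1  # blank columns between neighbouring glyphs


def split_glyphs(image):
    """Cut a row-major bitmap into fixed-width glyph bitmaps."""
    step = GLYPH_WIDTH + GAP
    width = len(image[0])
    return [
        [row[x:x + GLYPH_WIDTH] for row in image]
        for x in range(0, width, step)
    ]


def distance(glyph, template):
    """Count the pixels at which a glyph and a template disagree."""
    return sum(
        g != t
        for glyph_row, template_row in zip(glyph, template)
        for g, t in zip(glyph_row, template_row)
    )


def recognize(image):
    """Map each glyph to the template with the fewest differing pixels."""
    return "".join(
        min(TEMPLATES, key=lambda ch: distance(glyph, TEMPLATES[ch]))
        for glyph in split_glyphs(image)
    )


# A "scanned" bitmap of four digits; the last glyph is missing one pixel
# in its bottom row to mimic scan noise.
SCANNED = [
    "###.###..#..###",
    "..#.#.#.##....#",
    "###.#.#..#...#.",
    "#...#.#..#...#.",
    "###.###.###....",
]

if __name__ == "__main__":
    print(recognize(SCANNED))
```

Choosing the nearest template rather than demanding an exact match is what lets the sketch tolerate the damaged final glyph, and it hints at why poor document quality leads to recognition errors.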

OCR works by using software algorithms to recognize and extract text from images or scanned documents. OCR tools can be used to extract data from various document formats, including PDF, JPEG, and TIFF. The extracted data can then be stored in a database or spreadsheet for further analysis. OCR is a powerful tool for extracting data from documents, but it requires careful consideration of document quality, font recognition, and potential errors.

Natural Language Processing (NLP)

How NLP Works
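
A very small example of pulling structured information out of free text is keyword counting. The sketch below tokenizes a few customer reviews, drops common filler words, and reports the most frequent terms. The `STOPWORDS` set, the `REVIEWS` sample, and the function names are assumptions for illustration; full NLP pipelines add steps such as stemming, part-of-speech tagging, and statistical or neural models.

```python
import re
from collections import Counter

# Tiny, hand-picked stopword list; real lists are much longer.
STOPWORDS = {"the", "a", "is", "and", "was", "but", "it", "to", "of", "very"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def top_keywords(documents, n=3):
    """Return the n most frequent non-stopword tokens across documents."""
    counts = Counter(
        token
        for doc in documents
        for token in tokenize(doc)
        if token not in STOPWORDS
    )
    return counts.most_common(n)


# Hypothetical customer reviews.
REVIEWS = [
    "The battery is great and the screen is great.",
    "Battery life was poor, but the screen is sharp.",
    "Great screen, great price.",
]

if __name__ == "__main__":
    print(top_keywords(REVIEWS))
```

Note that plain counting treats "great" the same whether it appears in praise or sarcasm, which is a simple instance of the context problem that NLP has to deal with.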

NLP works by using software algorithms to analyze and extract insights from text data. NLP tools can be used to extract data from various text formats, including social media posts, customer reviews, and online forums. The extracted data can then be stored in a database or spreadsheet for further analysis. NLP is a powerful tool for extracting insights from text data, but it requires careful consideration of language complexity, context, and potential biases.

Application Programming Interfaces (APIs)

How APIs Work
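
In practice, extracting data through an API usually means building a request URL, sending an authenticated request, and reshaping the JSON response into rows. The sketch below shows that flow with the Python standard library. The endpoint `https://api.example.com/v1/products`, the bearer-token scheme, and the payload shape with an `items` list are hypothetical; every real API documents its own URLs, authentication, and response format.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

BASE_URL = "https://api.example.com/v1/products"  # hypothetical endpoint


def build_url(base_url, **params):
    """Append query parameters to the endpoint URL."""
    return f"{base_url}?{urlencode(params)}"


def fetch_json(url, token):
    """Send an authenticated GET request and decode the JSON response."""
    request = Request(
        url,
        headers={
            "Authorization": f"Bearer {token}",  # assumed auth scheme
            "Accept": "application/json",
        },
    )
    with urlopen(request, timeout=10) as response:
        return json.load(response)


def extract_rows(payload):
    """Flatten the assumed payload shape into (id, name, price) rows."""
    return [
        (item["id"], item["name"], item["price"])
        for item in payload["items"]
    ]


# Sample response body so the parsing step can be tried offline.
SAMPLE_PAYLOAD = json.loads(
    '{"items": ['
    '{"id": 1, "name": "Widget", "price": 9.5}, '
    '{"id": 2, "name": "Gadget", "price": 12.0}]}'
)

if __name__ == "__main__":
    print(build_url(BASE_URL, category="tools", page=1))
    print(extract_rows(SAMPLE_PAYLOAD))
```

With a real service, `extract_rows(fetch_json(url, token))` would produce rows ready to load into a spreadsheet or database; the token should come from secure configuration rather than source code.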

APIs work by providing a set of defined rules and protocols for accessing and extracting data from a website, application, or service. API tools can be used to extract data from various sources, including social media platforms, e-commerce websites, and online directories. The extracted data can then be stored in a database or spreadsheet for further analysis. APIs are a powerful tool for extracting data, but they require careful consideration of API terms of use, data quality, and potential security issues.

What is data extraction?

Data extraction is the process of retrieving specific data from a larger dataset, document, or website.

What are the benefits of data extraction?

The benefits of data extraction include improved data quality, increased efficiency, and enhanced decision-making.

What are the different methods of data extraction?

The different methods of data extraction include web scraping, data mining, OCR, NLP, and APIs.

In conclusion, data extraction is a critical process that can help organizations extract valuable insights from large datasets, documents, or websites. The five methods of data extraction discussed in this article - web scraping, data mining, OCR, NLP, and APIs - each have their strengths and weaknesses, and the choice of method depends on the specific use case, data source, and desired outcome. By understanding the benefits and working mechanisms of each method, organizations can make informed decisions about which method to use and how to implement it effectively. We invite you to share your thoughts and experiences with data extraction in the comments section below, and to explore the resources and tools available for extracting data from various sources.