Intro

Finding duplicates in a dataset or a collection of items is a crucial task in various fields, including data analysis, programming, and even everyday life. Duplicates can lead to inaccurate results, wasted resources, and decreased efficiency. In this article, we will explore five ways to find duplicates, discussing their applications, benefits, and step-by-step guides on how to implement them.

The importance of identifying duplicates cannot be overstated. In data analysis, duplicates can skew results, leading to incorrect conclusions. In programming, duplicates can cause bugs and errors, making it essential to remove them to ensure the smooth operation of software and applications. Moreover, in everyday life, finding duplicates can help organize and declutter physical and digital spaces, saving time and resources.

The process of finding duplicates involves comparing items within a dataset or collection to identify identical or very similar entries. This can be done manually for small datasets, but as the size of the dataset increases, manual inspection becomes impractical, and automated methods are preferred. Automated methods use algorithms and software tools to quickly and accurately identify duplicates, making them indispensable for large-scale data processing.

Understanding Duplicates

Before diving into the methods of finding duplicates, it's essential to understand what constitutes a duplicate. A duplicate is an exact or very similar copy of an item. In the context of data, duplicates can refer to identical rows in a database or spreadsheet, while in physical collections, duplicates could be identical items. Understanding the nature of duplicates helps in choosing the most appropriate method for identifying and managing them.

Method 1: Manual Inspection

Manual inspection involves reviewing each item in a dataset or collection to identify duplicates. This method is feasible for small datasets but becomes time-consuming and prone to errors as the dataset size increases. Steps for manual inspection include sorting the data, comparing each item to others, and marking or removing duplicates. While manual inspection can be effective for small-scale applications, it's not practical for large datasets.

Benefits and Limitations

- Benefits: Simple to implement, requires no special software, and can be very accurate if done correctly.

- Limitations: Time-consuming, prone to human error, and not scalable for large datasets.

Method 2: Using Software Tools

Utilizing software tools is a more efficient method for finding duplicates, especially in large datasets. Various software applications, including spreadsheet programs like Microsoft Excel and Google Sheets, offer built-in functions to identify and remove duplicates. Additionally, specialized data analysis software and programming languages like Python and R provide powerful libraries and tools for duplicate detection.

Step-by-Step Guide

- Select the Dataset: Choose the dataset or range of cells you want to check for duplicates.

- Apply the Formula or Function: Depending on the software, apply the appropriate formula or function to highlight or remove duplicates.

- Review and Refine: Review the results, and refine the process as necessary to ensure accuracy.

Method 3: Algorithmic Approach

The algorithmic approach involves using specific algorithms designed to find duplicates efficiently. This method is particularly useful in programming and data analysis. Algorithms can compare items based on various criteria, such as exact matches, similarity, or proximity, making them versatile for different types of data.

Types of Algorithms

- Hashing Algorithms: Useful for finding exact duplicates by assigning a unique hash value to each item.

- Sorting Algorithms: Sorts the data, making it easier to identify adjacent duplicates.

- Clustering Algorithms: Groups similar items together, which can help in identifying duplicates based on similarity.

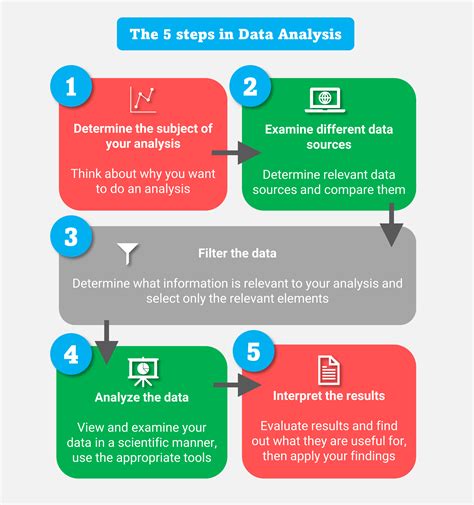

Method 4: Data Visualization

Data visualization involves representing data in a graphical format to better understand and identify patterns, including duplicates. By visualizing data, duplicates can become apparent, especially when using charts, graphs, or maps that highlight repetition or similarity.

Benefits of Visualization

- Easy Identification: Duplicates can be easily spotted in visual representations.

- Pattern Recognition: Helps in recognizing patterns that might indicate duplicates.

- Interactive: Many visualization tools allow for interactive exploration, making it easier to investigate potential duplicates.

Method 5: Machine Learning

Machine learning techniques can be employed to find duplicates, especially in complex datasets where traditional methods may fail. Machine learning models can be trained to recognize patterns and anomalies, including duplicates, based on historical data.

Applications of Machine Learning

- Duplicate Detection: Trained models can automatically detect duplicates with high accuracy.

- Data Cleaning: Machine learning can be part of a broader data cleaning process, ensuring data quality and integrity.

- Real-Time Processing: Can be used for real-time duplicate detection in streaming data or live systems.

Duplicate Detection Image Gallery

What are the common methods for finding duplicates in a dataset?

+Common methods include manual inspection, using software tools, algorithmic approaches, data visualization, and machine learning techniques.

Why is it important to remove duplicates from a dataset?

+Removing duplicates is crucial because they can lead to inaccurate analysis results, waste resources, and decrease the efficiency of data processing.

How does data visualization help in finding duplicates?

+Data visualization represents data in a graphical format, making it easier to spot duplicates and understand patterns within the data.

In conclusion, finding duplicates is a vital process that ensures the accuracy, efficiency, and reliability of data and systems. Whether through manual inspection, software tools, algorithmic approaches, data visualization, or machine learning, each method has its benefits and applications. By understanding and applying these methods appropriately, individuals and organizations can improve data quality, reduce errors, and enhance overall performance. We invite readers to share their experiences and tips on finding duplicates, and we look forward to your comments and questions on this topic.